Scoring behavioural surveys

Most behavioural surveys are scored "linearly", without taking correlation of answers into account. However, given modern machine learning, we should be able to do much better.

I remember one orientation session from when I joined IIMB in 2004. It was taken by our HR Professor, and it was basically a bunch of behavioural questionnaires we had to take in order to “know ourselves better”. There were tens (or even hundreds) of questions in these questionnaires, and then scoring was rather simple - of the nature of “give yourself 1 point for every A, 2 points for every B, and so on”.
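To make the “1 point for every A, 2 points for every B” scheme concrete, here is a minimal Python sketch of that kind of linear scoring. The answer-to-points mapping (`POINTS`) and the function name are hypothetical illustrations, not taken from any actual questionnaire.

```python
# Hypothetical answer-to-points mapping in the style of
# "1 point for every A, 2 points for every B, and so on".
POINTS = {"A": 1, "B": 2, "C": 3, "D": 4}


def linear_score(answers: list[str]) -> int:
    """Score each answer on its own and add up the points.

    No answer has any influence on how another answer is scored.
    """
    return sum(POINTS[a] for a in answers)


print(linear_score(["A", "B", "B", "D"]))  # 1 + 2 + 2 + 4 = 9
```

Because every answer is scored in isolation, shuffling the same answers across different questions gives exactly the same total.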

In fact, this has been the nature of every other such behavioural questionnaire I’ve taken as well. Throughout my life (at least as far back as 2002, and at least as recently as a minute ago) I’ve taken questionnaires related to mental health, and all of them are of this nature - each question is evaluated individually and then points are summed in a simple manner to tell you what you are like.

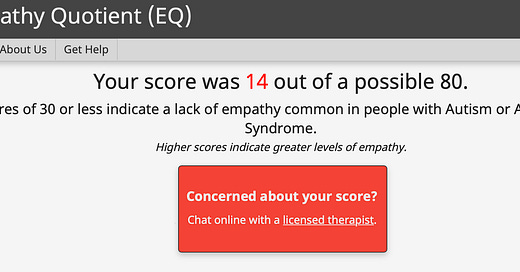

Like right now, I saw this questionnaire about “empathy quotient” on Twitter and had to take it. This is what I got:

Now, given that most such tests are administered electronically, typically using web tools, I don’t know why scoring has to be so simple. Basically, my problem with all these questionnaires is that their scoring ignores correlation: each answer is scored individually, without regard to how you have answered the other questions. And I don’t see any reason it needs to be that way.

Consider these questions from the questionnaire I just took now:

2. I prefer animals to humans.

18. When I was a child, I enjoyed cutting up worms to see what would happen.

38. It upsets me to see an animal in pain.

If you think about it, these questions are all highly correlated. If I answer “yes” to both question 38 and question 18, there is something seriously wrong with me (or I’m a pathological liar). This is just one example. In a questionnaire of such length, it is fairly easy to spot other such contradictions and patterns. And I think there is far more information in these patterns than in a simple “total up the yeses”.
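Here is a minimal Python sketch of what flagging such contradictions could look like, assuming yes/no answers keyed by question number. The list of contradictory pairs is hypothetical and written by hand (only the 18/38 pair comes from the example above); in the approach argued for here, such pairs would be found from data rather than listed manually.

```python
# Hypothetical pairs of question numbers where answering "yes" to both is
# contradictory. Only (18, 38) comes from the example in the text.
CONTRADICTORY_PAIRS = [(18, 38)]


def find_contradictions(answers: dict[int, str]) -> list[tuple[int, int]]:
    """Return every listed pair where the respondent answered "yes" to both."""
    return [
        (q1, q2)
        for q1, q2 in CONTRADICTORY_PAIRS
        if answers.get(q1) == "yes" and answers.get(q2) == "yes"
    ]


respondent = {2: "no", 18: "yes", 38: "yes"}
print(find_contradictions(respondent))  # [(18, 38)]
```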

Basically, with the sort of machine learning available nowadays, we should be able to do some simple cluster analysis on groups of seemingly correlated questions to see common patterns in how people answer. Based on this, we can then look at groups of answers together (no need to do all of this manually; a good ML algorithm can take care of this) to provide far superior insight.
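As a rough illustration of grouping correlated questions, here is a deliberately simple stand-in for proper cluster analysis: two questions are linked when the absolute Pearson correlation of their answers across respondents reaches a threshold, and groups are the connected components of those links (single-linkage clustering). The function names, the 0.7 threshold and the toy response matrix are all made-up assumptions; a real analysis would likely use a dedicated library and far more data.

```python
from itertools import combinations
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation of two equal-length columns (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)


def group_questions(responses: list[list[float]], threshold: float = 0.7) -> list[list[int]]:
    """Group question indices whose answers are strongly correlated.

    responses[r][q] is respondent r's numeric answer to question q.
    Questions are linked when |correlation| >= threshold, so a reverse-worded
    question (strong negative correlation) joins the same group.
    Groups are the connected components of these links (single linkage).
    """
    n_questions = len(responses[0])
    columns = [[row[q] for row in responses] for q in range(n_questions)]
    parent = list(range(n_questions))  # union-find forest over questions

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i, j in combinations(range(n_questions), 2):
        if abs(pearson(columns[i], columns[j])) >= threshold:
            parent[find(i)] = find(j)

    groups: dict[int, list[int]] = {}
    for q in range(n_questions):
        groups.setdefault(find(q), []).append(q)
    return sorted(groups.values())


# Made-up answers on a 1-4 scale: 6 respondents x 4 questions.
responses = [
    [1, 1, 4, 2],
    [2, 2, 3, 3],
    [3, 3, 2, 3],
    [4, 4, 1, 2],
    [1, 2, 4, 3],
    [4, 4, 1, 2],
]
print(group_questions(responses))  # [[0, 1, 2], [3]]
```

In the made-up data, questions 0 and 1 move together and question 2 is a reverse-worded mirror of question 0, so all three end up in one group while question 3 stands alone.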

The problem with this, of course, is that until we have sufficient data (at least N people who have taken the questionnaire), we are unable to figure out the correlations and patterns in answers. This means the first few people who take the questionnaire either receive simplistic scores (“1 point for every YES” type) or receive their full scores well after they have taken the questionnaire (once sufficient data exists to perform the clustering etc.).
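The cold-start trade-off can be sketched as a scorer that hands out a provisional linear score immediately and queues early respondents until N answer sets exist. Everything here (`QuestionnaireScorer`, `min_responses`, the points mapping) is hypothetical, and the pattern-aware re-scoring itself is deliberately left out.

```python
from dataclasses import dataclass, field

POINTS = {"A": 1, "B": 2, "C": 3, "D": 4}  # hypothetical answer-to-points mapping


@dataclass
class QuestionnaireScorer:
    """Hypothetical scorer illustrating the cold-start trade-off.

    Every respondent gets a provisional linear score right away. Those who
    arrive before min_responses answer sets exist are queued, so they can be
    re-scored later by a pattern-aware model (not implemented here).
    """

    min_responses: int  # plays the role of "N" in the text
    pool: list[list[str]] = field(default_factory=list)
    pending: list[int] = field(default_factory=list)  # ids awaiting a full score

    def submit(self, answers: list[str]) -> int:
        """Store the answers and return the provisional linear score."""
        self.pool.append(answers)
        respondent_id = len(self.pool) - 1
        if len(self.pool) < self.min_responses:
            self.pending.append(respondent_id)
        return sum(POINTS[a] for a in answers)

    def ready_for_pattern_scoring(self) -> bool:
        return len(self.pool) >= self.min_responses

    def release_pending(self) -> list[int]:
        """Return queued respondent ids once enough data exists, else []."""
        if not self.ready_for_pattern_scoring():
            return []
        released, self.pending = self.pending, []
        return released


scorer = QuestionnaireScorer(min_responses=3)
scorer.submit(["A", "B"])
scorer.submit(["D"])
print(scorer.release_pending())  # [] (only 2 of 3 responses so far)
scorer.submit(["C"])
print(scorer.release_pending())  # [0, 1]
```

The design keeps both options from the text open: early respondents are never left without any score, and the queue records exactly who should be told once a better score becomes available.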

I’m running my own company now, but I think scope exists to completely revamp the way HR / behavioural / mental health questionnaires are scored and interpreted.

PS: Sometime in my life I want to get my hands on raw data from pre-poll surveys. Again, my pet peeve with those is that typical news reporting is very “linear” and doesn’t look at correlation effects. And I think the real value there is in the correlations.

Excellent, Karthik. Put differently, this is also a problem with many "average" measures. (The average temperature of coffee drunk would come out as lukewarm to cool, for example, to parody the issue.) Agree that ML would be a better way; but such models tend to learn to weight the answers (don't they?) to correlate with a desired outcome. Those come with their own issues. Also, how do you get an out-of-model "objective" measure of, say, empathy that you can train the model on?

Reach out to AxisMyIndia, CSDS and CVoter and offer to do some work for them for free that they can take credit for. Next time, insist they share the credit. Avoid going on TV yourself if you don't want to; you can do webinars/podcasts etc. It will be fantastic marketing for Babbage.