Some notes on LLMs

I use a framework I'd written about in 2018 to talk about new developments in the world of LLMs, including the o-series models

Yesterday, on a metro ride on my way to meet some old friends, I listened to an episode of the Invest Like The Best podcast featuring Chetan Puttagunta and A Modest Proposal.

In this episode, they talk about some developments in LLMs, and what they mean for technology in general and markets in particular. It is a bit of an old episode (in LLM terms), and I only caught up with it yesterday.

The main point they make in the podcast is that LLMs are plateauing in terms of “pretraining”. While compute capacity continues to go up, and models can have more parameters, they are limited by the amount of publicly available text data to train on.

This is related to something I’d written back in 2018: with “machine learning”, the more parameters your model has, the more data you need to “tie down” those parameters for effective learning. This is what I had written:

> Such complicated patterns can be identified because the system parameters have lots of degrees of freedom. The downside, of course, is that because the parameters start off having so much freedom, it takes that much more data to “tie them down”. The reason Google Photos can tag you in your baby pictures is partly down to the quantum of image data that Google has, which does an effective job of tying down the parameters. Google Translate similarly uses large repositories of multi-lingual text in order to “learn languages”.
>
> Like most other things in life, machine learning also involves a tradeoff. It is possible for systems to identify complex patterns, but for that you need to start off with lots of “degrees of freedom”, and then use lots of data to tie down the variables. If your data is small, then you can only afford a small number of parameters, and that limits the complexity of patterns that can be detected.
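To make “tying down” concrete, here is a minimal sketch that uses ordinary linear regression as a deliberately simplified stand-in (this is not how LLMs are trained). Each column of `X` is a parameter and each row is a data point. With fewer data points than parameters, some directions in parameter space are invisible to the data, so the parameters can be moved along them without changing any prediction on the training set. The function names and sizes are illustrative assumptions.

```python
import numpy as np

def free_directions(n_points: int, n_params: int, seed: int = 0) -> int:
    """How many parameter directions the data leaves completely untied."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n_points, n_params))  # one row per data point, one column per parameter
    return n_params - int(np.linalg.matrix_rank(X))

def nudge_is_invisible(n_points: int, n_params: int, seed: int = 0) -> bool:
    """True if some change to the parameters leaves every training prediction unchanged."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n_points, n_params))
    w = rng.normal(size=n_params)              # an arbitrary parameter vector
    _, _, Vt = np.linalg.svd(X)                # last row of Vt: the direction X responds to least
    nudge = 5.0 * Vt[-1]                       # lies in X's null space when n_points < n_params
    return np.allclose(X @ w, X @ (w + nudge))

print(free_directions(5, 20), nudge_is_invisible(5, 20))      # 15 True
print(free_directions(100, 20), nudge_is_invisible(100, 20))  # 0 False
```

With 5 data points and 20 parameters, 15 directions are free, so whatever values the model “learns” along them are arbitrary; with 100 data points, none are.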

When it comes to LLMs, we usually talk about models in terms of billions of parameters. For example, I have a bunch of ~7-8B parameter LLMs running on my local computer, which I use while programming (among other things). Meta’s largest Llama 3.1 model has 405B parameters. OpenAI hasn’t disclosed the size of GPT-4o, but it is likely of a similar order of magnitude.
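A back-of-the-envelope way to see why ~8B models run on a laptop while a 405B model doesn’t: just holding the weights takes (parameters × bits per parameter ÷ 8) bytes. This sketch assumes either 16-bit weights or 4-bit quantised weights, and counts only the weights, ignoring the KV cache, activations and runtime overhead, so real memory use is higher.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Memory needed just to store the weights, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9

for name, n_params in [("8B", 8e9), ("405B", 405e9)]:
    for bits in (16, 4):
        print(f"{name} @ {bits}-bit: {weight_memory_gb(n_params, bits):.1f} GB")
# 8B @ 16-bit: 16.0 GB
# 8B @ 4-bit: 4.0 GB
# 405B @ 16-bit: 810.0 GB
# 405B @ 4-bit: 202.5 GB
```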

While there are reports that a “GPT 5” is in the making at OpenAI, it is interesting that they have gone another way, with the “o” series of models. First came o1, a month or two ago, and then on Friday OpenAI announced “o3” (apparently o2 was skipped to avoid a clash with the telecom operator O2. Interestingly, the Indian investment bank with O3 in its name doesn’t seem to have been a problem).

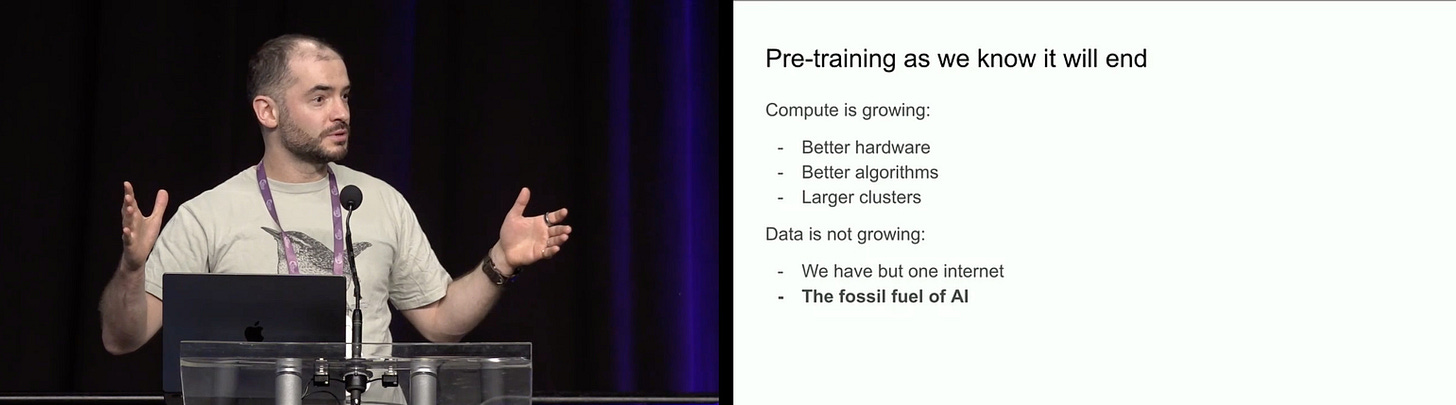

The P in GPT stands for “pretraining”. Recently, estranged OpenAI cofounder Ilya Sutskever gave a talk where he said that pretraining is “hitting a wall in terms of data”. Basically, while it is possible to build models with more parameters and more compute, there is a shortage of data to tie down these additional parameters.

For a while, people tried using “synthetic data” to fill in the gap: use existing LLMs to generate more text, and then use that text as further training data. However, as they are (likely belatedly) realising, this is akin to inbreeding. Text generated by existing LLMs will not contain much novel information beyond what was already in those LLMs’ training data.

Rephrasing what I’d written six years ago, the more parameters you have, the more uncorrelated data you need in order to tie down these parameters and have a good model.
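One way to see why “uncorrelated” matters, again in a deliberately simplified linear stand-in (not how LLMs are actually trained): treat each data point as a row of a matrix and each parameter as a column. The number of parameter directions the data can tie down is the rank of that matrix. Rows that are just blends of existing rows, a crude analogue of synthetic data generated from what a model already knows, add no rank, while independently drawn rows do. The sizes and the function name are illustrative assumptions.

```python
import numpy as np

def directions_pinned(seed: int = 42) -> tuple[int, int, int]:
    rng = np.random.default_rng(seed)
    n_params = 50
    real = rng.normal(size=(20, n_params))     # 20 original data points
    mixing = rng.normal(size=(200, 20))
    synthetic = mixing @ real                  # 200 rows, each a blend of the originals
    fresh = rng.normal(size=(200, n_params))   # 200 independently drawn rows
    rank = np.linalg.matrix_rank
    return (
        int(rank(real)),                        # directions tied down by the original data
        int(rank(np.vstack([real, synthetic]))),  # after adding "synthetic" rows
        int(rank(np.vstack([real, fresh]))),      # after adding fresh, uncorrelated rows
    )

print(directions_pinned())  # (20, 20, 50)
```

Ten times more synthetic rows leave 30 of the 50 parameter directions exactly as untied as before; the same number of fresh rows ties down all of them.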

Hence there has been a change in strategy in the LLM world. Rather than focussing on pretraining, models are now being built to focus on reasoning. Pretraining improvement requires an increase in uncorrelated text data. In the absence of that, you take what you have as a given, and instead do more work at the time of inferencing (when the user asks the question).

That is what the likes of o1 and o3 do. They use (most likely) the 4o class of models, but instead of giving you a one-shot output when you ask a question, they work multi-shot. Among other things, they use a generator-verifier framework (which I spoke about in my talk at the Fifth Elephant earlier this year).
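OpenAI hasn’t published how the o-series models work internally, so the following is only a minimal sketch of the general generator-verifier idea, not their method: a generator proposes candidate answers (here by random sampling), a verifier checks each one, and we stop at the first candidate that passes. The toy task (factorising 391) and the helpers `guess_factors` and `check_factors` are hypothetical stand-ins for an LLM and a checker.

```python
import random
from typing import Callable, Optional, Tuple

def generate_then_verify(
    generate: Callable[[random.Random], str],
    verify: Callable[[str], bool],
    max_attempts: int = 100,
    seed: int = 0,
) -> Tuple[Optional[str], int]:
    """Sample candidates until one passes the verifier.

    Returns (accepted answer or None, number of generator calls used).
    """
    rng = random.Random(seed)
    for attempt in range(1, max_attempts + 1):
        candidate = generate(rng)
        if verify(candidate):
            return candidate, attempt
    return None, max_attempts

# Hypothetical toy stand-ins: the "generator" guesses a factorisation of 391,
# and the "verifier" checks the guess with exact arithmetic.
def guess_factors(rng: random.Random) -> str:
    a = rng.randint(2, 30)
    return f"{a} x {391 // a}"

def check_factors(answer: str) -> bool:
    a, b = (int(part) for part in answer.split(" x "))
    return a > 1 and b > 1 and a * b == 391

answer, calls = generate_then_verify(guess_factors, check_factors)
print(answer, "after", calls, "generator calls")
```

The cost of an answer here is the number of generator calls, which is exactly the kind of extra inference-time work that a one-shot model never does.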

The analogy you can think of is this: “pure GPT” (up to 4o) is like microwaved food. All the food is pre-prepared. When you want to eat something, you pick a combination from the fridge, microwave it and eat. The “inference-led” models (o-series) are like a dark kitchen with professional chefs. There is a large mise-en-place that the chefs can pick from, but then they use their own intelligence and clever combinations and procedures to prepare the dish.

There are several implications to this approach.

The same pretrained model can now potentially do more, by doing more work at the time of serving the result. Models can be far more “intelligent” now.

The economics of LLMs will change significantly (this is something that Chetan and Modest spoke about in the podcast). If you choose to use these inference-based models, you will need much heavier compute at the inference step. Right now, an ordinary M-series MacBook (I have a base model 14” M1) can run inference on an 8B parameter model. It is not ultra-quick, but it isn’t slow either. This means that you can realistically get work done using an EC2 instance with a similarly sized GPU.

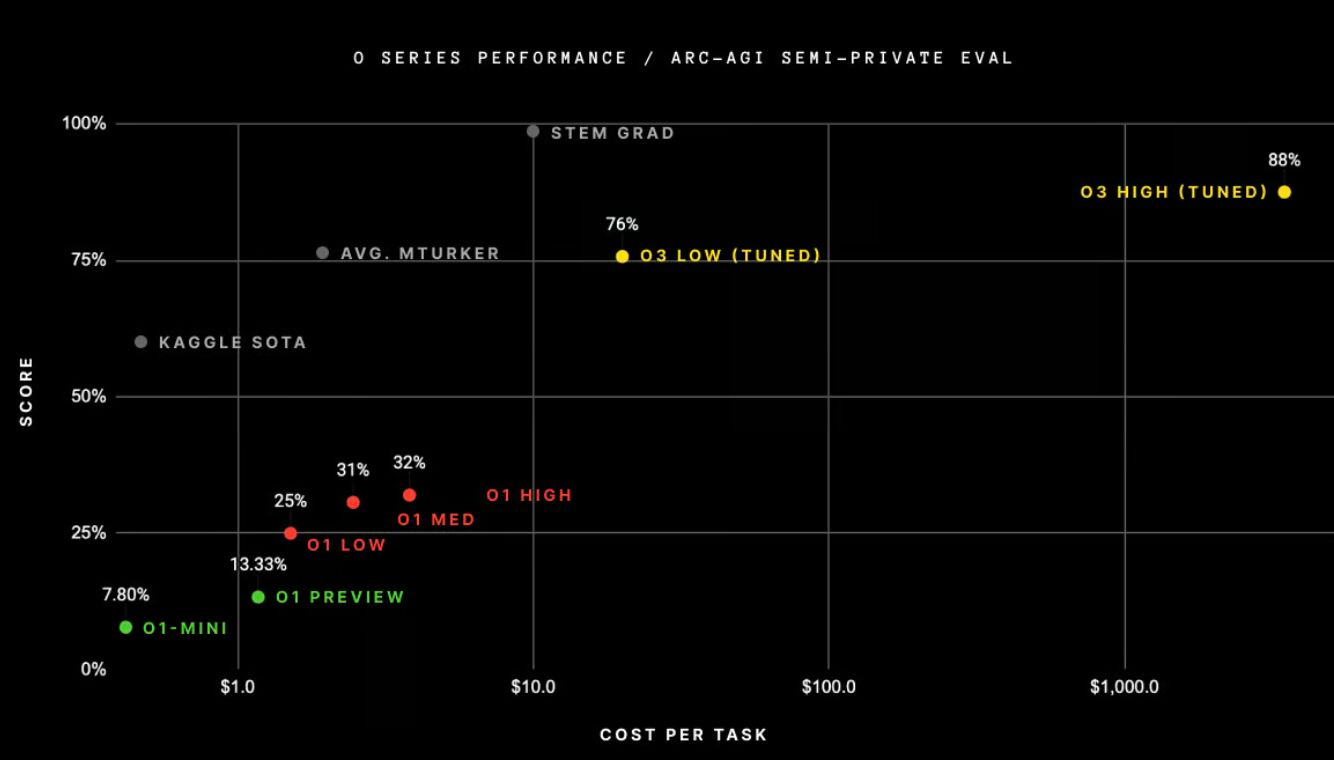

With o-series (or equivalent) models, there is significant work to be done at the time of inferencing, since it’s a multi-shot process (this is why OpenAI limits o1 usage, even for premium users). This means you need orders of magnitude more compute for inference.

As Hariba posted on LinkedIn, the X axis in the chart above is on a log scale.
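A rough way to see why this gets expensive is the common rule of thumb that a transformer’s forward pass costs about 2 FLOPs per parameter per generated token (ignoring attention costs that grow with context length). The numbers below (an 8B model, a 500-token one-shot answer versus 16 attempts of 2,000 reasoning tokens each) are hypothetical, since OpenAI hasn’t disclosed what o1 or o3 actually do; the point is only that the multiplier is the number of attempts times the extra length.

```python
def inference_flops(n_params: float, tokens: int) -> float:
    """Rule-of-thumb forward-pass cost: ~2 FLOPs per parameter per generated token."""
    return 2 * n_params * tokens

n_params = 8e9                                            # hypothetical model size
one_shot = inference_flops(n_params, tokens=500)          # one 500-token answer (hypothetical)
multi_shot = 16 * inference_flops(n_params, tokens=2000)  # 16 attempts x 2,000 tokens (hypothetical)

print(f"one-shot:   {one_shot:.1e} FLOPs")                                  # 8.0e+12 FLOPs
print(f"multi-shot: {multi_shot:.1e} FLOPs ({multi_shot / one_shot:.0f}x)")  # 5.1e+14 FLOPs (64x)
```

Even with these modest made-up settings, the same model needs almost two orders of magnitude more compute per question.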

So far we have built our company with the assumption that we will be using classic GPT models, where all the work has been done in pretraining, and all the intelligence on the inference side is part of our code base. We are also working on the assumption that we can embed “ordinary LLMs” in the customer’s cloud for inference.

Now we (and other companies building with LLMs) will have to evaluate whether we want to continue with GPT-only models, or move to inference-enhanced models for our work.

My prediction is that we will see far more specialisation when it comes to inference-enhanced models. I don’t know if it will be the likes of OpenAI who do it, or people leveraging open source LLMs. You might see a specialist model for coding, a specialist model for editing text, a specialist model for answering children’s questions, and so on.

Now I’m starting to wonder whether what we are building can be thought of as one of these ultra-specialist, inference-based LLMs.

In the last couple of days, I’ve also seen significant self-flagellation both on generalised social media (aka Twitter / LinkedIn) and on LLM-specific WhatsApp groups I’m part of. “What’s the use of being a programmer any more”, “AGI is here” and things like that.

My immediate reaction to all that is that people read too much dystopian fiction.

My more serious reaction is that “benchmarks” are given too much credit. They are arbitrarily defined problem statements, and not a generic measure of intelligence. I’ll possibly elaborate on that in a separate post.