Using AI to set a quiz

I used ChatGPT rather heavily while setting last weekend's KQA Connections Quiz. I'm documenting some of my processes here.

I hosted a quiz on Sunday. Subrat Mohanty and I were quizmasters for the second edition of the KQA Connections Quiz, based on the Only Connect game show format. The quiz went well, I think, with 15 teams (of up to four members each) taking part, slightly down from the 18 that took part in the inaugural edition last year.

As you might expect in 2025, for a task like this (or for any task, really), I used AI heavily while setting my half of the quiz. Here I document some of the processes I used to get AI to help me.

One key role that AI played in setting this quiz was generating the PowerPoint presentation we used to run it. Creating a PowerPoint presentation for an Only Connect style quiz can be cumbersome: putting multiple boxes on a slide, filling them with text, resizing them, resizing images, and so on. I got a program to do that for me, and I wrote that program using Cursor. I’ll write a separate post on that in due course.

This post is more about creating the content of the quiz using AI.

First, a technical aside.

Generators and Verifiers

I never seem to tire of this concept. It was a big part of my talk at last year’s edition of The Fifth Elephant, where I spoke about Building an AI Data Analyst. Then, earlier this year, I went to Silicon Valley and found that the concept is part of the “conventional wisdom” there.

Anyway, I don’t want to go into too much detail here on my personal blog, so I’ll just leave a link to my 5el talk.

The basic concept is about getting LLMs to be creative while curbing their hallucinations. The idea is to use LLMs as “idea generators” and then have more deterministic “verifiers” (which could themselves be LLMs) check whether each idea makes sense. You then implement an idea if and only if the verifier blesses it.

This way, you can have a highly “random” generator that comes up with creative ideas (if you think about it, hallucination is just the other side of creativity), while the verifier nips the “bad creativity” in the bud. This gives you the best of both worlds (I compared this solution to a call option in my 5el talk).
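To make the generator-verifier idea concrete, here is a minimal Python sketch. The generator and verifier in it are toy stand-ins assumed purely for illustration (random guesses and a primality check); in the setting described here, the generator would be an LLM and the verifier a search, a rule, or another model.

```python
import random
from typing import Callable, List, TypeVar

T = TypeVar("T")


def generate_and_verify(
    generate: Callable[[], T],
    verify: Callable[[T], bool],
    attempts: int,
) -> List[T]:
    """Draw `attempts` ideas from a (possibly wild) generator and keep
    only the ones the verifier blesses."""
    accepted: List[T] = []
    for _ in range(attempts):
        idea = generate()
        if verify(idea):  # act on an idea if and only if it passes
            accepted.append(idea)
    return accepted


# Toy stand-ins (assumptions for illustration only):
# a "creative" generator that guesses random numbers...
rng = random.Random(42)


def wild_guess() -> int:
    return rng.randint(1, 100)


# ...and a deterministic verifier that checks whether a guess is prime.
def is_prime(n: int) -> bool:
    if n < 2:
        return False
    return all(n % d for d in range(2, int(n ** 0.5) + 1))


good_ideas = generate_and_verify(wild_guess, is_prime, attempts=20)
print(good_ideas)  # only primes survive, however wild the guesses were
```

The design relies on an asymmetry: the generator can be as noisy as it likes, because anything it gets wrong is discarded rather than acted upon. That capped downside is what the call-option analogy refers to.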

Now back to the quiz

Setting an Only Connect style quiz is no easy business. Each question needs four items that share a connection (or form a sequence). Sometimes you have an idea and can come up with two or three clues, but finding a fourth can be daunting. You don’t even know where to look, or what to search for. You need help.

Conventional Google searches are of little help in such situations: your needs are too broad, and you need some creativity on the part of whoever is doing the search for you. This is a situation tailor-made for LLMs.

Except, remember, they can hallucinate. This is where you need some sort of verifier. The verifier in this case was plain Google search: if you ignore the ads and the summaries on top (including the Gemini stuff), clicking through to the results of a Google search can give you fairly reliable information.

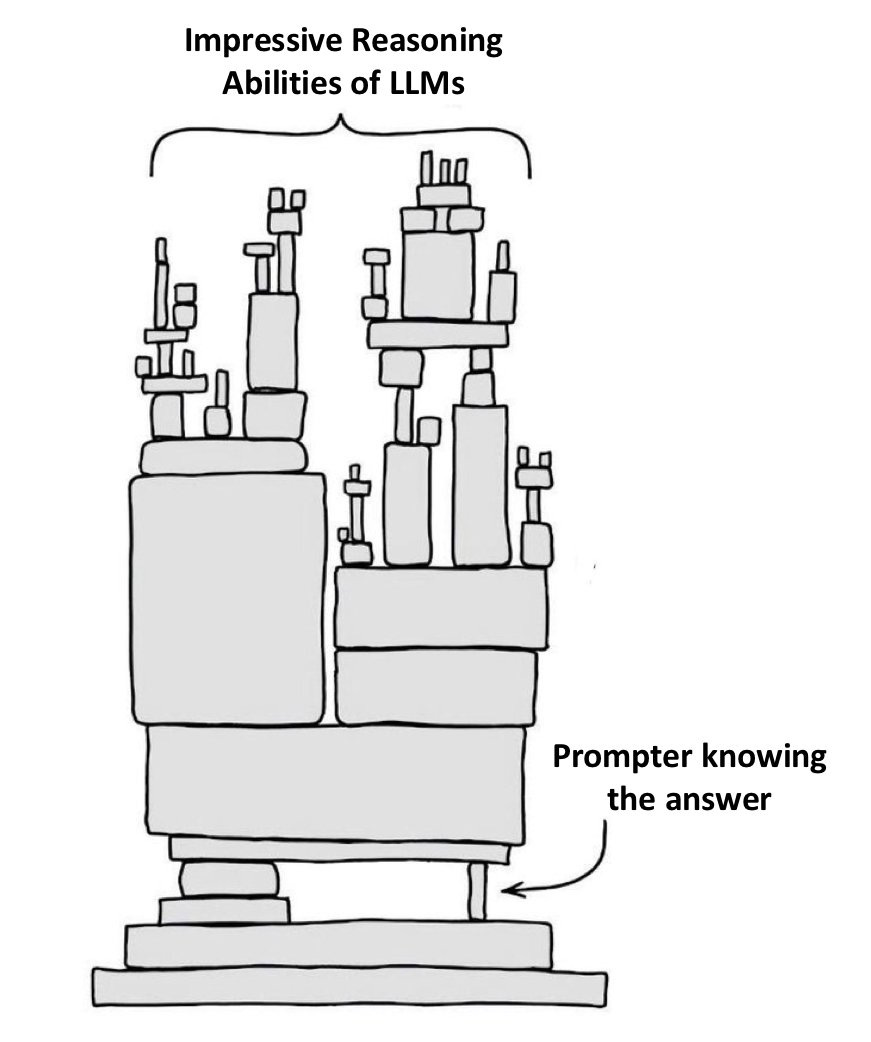

Also, you need to remember that I as a reasonably knowledgeable human was present in the entire process - this wasn’t like the product that I’m building, where we’re building an AI solution without a human in the loop. And this slide from veteran AI scientist Subbarao Kambhampati is relevant here;

Since I was supervising (literally!) the process, it was easy to tell where the LLM’s answer seemed right and where I needed to verify it using more deterministic searches.

So it wasn’t just a generator-verifier framework I used. It was a generator, a verifier AND ME!

Generating questions

A few friends have asked to play the quiz, so I’m not putting it in the public domain yet. Instead, let me illustrate how I used LLMs to set questions with one that we ultimately didn’t use.

Some people’s full names are contained in other people’s full names. For example, when right back Kyle Walker moved from Tottenham Hotspur to Manchester City, his place was briefly taken by Kyle Walker-Peters (who now plays for Southampton).

If you follow cricket, you’ll know that Neil Harvey Fairbrother was named after Neil Harvey. (This is a “Saint Peter” in quizzing circles now, though I remember working it out and answering it in a quiz in 2004.) It is another example of one person’s full name being contained in another’s, though, strictly speaking, nobody uses Harvey as part of Fairbrother’s name.

Inspired by these two examples (though I didn’t want to use either, since I found them too “niche”), I wanted to set a round on “pairs of people where the name of one is a prefix of the name of the other”. I immediately came up with tabla player Alla Rakha and music director Allah Rakha Rahman. I needed more.

This was a perfect use case for ChatGPT. (I share a premium account with my cofounder, who is an occasional quizzer, so I set the entire quiz using “temporary chats” lest the questions leak to him.) All I had to do was craft a prompt describing the basis of the question and ask it to list several more examples. And list it did.

This wasn’t without hiccups. It randomly cut off people’s names to make them fit. It gave incorrect descriptions of who people were (LLMs are still not fully there when it comes to “named entity recognition”). And so on.

Occasionally I had to use domain knowledge; occasionally I had to use Google. I was the verifier here (supported by Google), which meant we could contain ChatGPT’s “downside volatility” while taking advantage of its upside.
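For this particular question, part of the verification could in principle be mechanical. Below is a minimal Python sketch of a check for “the name of one is a prefix of the name of the other”, assuming names are compared word by word, case-insensitively, with hyphens treated as spaces. The helpers `name_tokens` and `is_name_prefix` are hypothetical, not part of the process described above, and they only check the pattern: whether the people exist and are described correctly still needs domain knowledge or Google.

```python
import re
from typing import List, Tuple


def name_tokens(name: str) -> List[str]:
    """Lowercase word tokens of a name, treating hyphens as spaces."""
    return [t for t in re.split(r"[\s\-]+", name.lower()) if t]


def is_name_prefix(shorter: str, longer: str) -> bool:
    """True if the words of `shorter` are exactly the first words of
    `longer`, and `longer` has at least one more word."""
    short_t, long_t = name_tokens(shorter), name_tokens(longer)
    return len(short_t) < len(long_t) and long_t[: len(short_t)] == short_t


pairs: List[Tuple[str, str]] = [
    ("Kyle Walker", "Kyle Walker-Peters"),
    ("Neil Harvey", "Neil Harvey Fairbrother"),
    ("Alla Rakha", "Allah Rakha Rahman"),
]

for shorter, longer in pairs:
    print(f"{shorter} -> {longer}: {is_name_prefix(shorter, longer)}")
```

A strict check like this rejects the Alla Rakha / Allah Rakha Rahman pair because of the spelling difference, even though a human setter would happily accept it, which is one reason the human verifier stays in the loop.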

Generating clues

For a good Only Connect style quiz, it’s not enough to come up with the questions; you also need to come up with clues. For example, to clue AR Rahman (as above), I could simply use a picture, but that would make things too obvious. So I needed a “clue” that describes him.

I used ChatGPT liberally for this purpose throughout the quiz. Again, it was a good generator, though in some cases I had to prompt it a few times to get a good enough clue. Once again it hallucinated: for example, it identified one Atlanta ’96 gold medallist as the “Barcelona ’92 gold medallist”. This happened to be something I had domain knowledge of, so I could recheck using Google and change the clue accordingly.

The other downside of using ChatGPT liberally to generate clues was that, in some questions, the clues varied widely in nature. That is something you seldom see on the TV show, where the questions are more consistent.

However, overall, the quiz was well received (see below, for example), so I don’t think I should sweat this.

The Wall

And then there was the matter of the connecting wall. We had two of them in the quiz. One was set by Subrat a week earlier; we had properly vetted it and shown it to our “online guinea pigs”, who tested the quiz the previous night.

And then there was this wall I came up with while showering on the morning of the quiz.

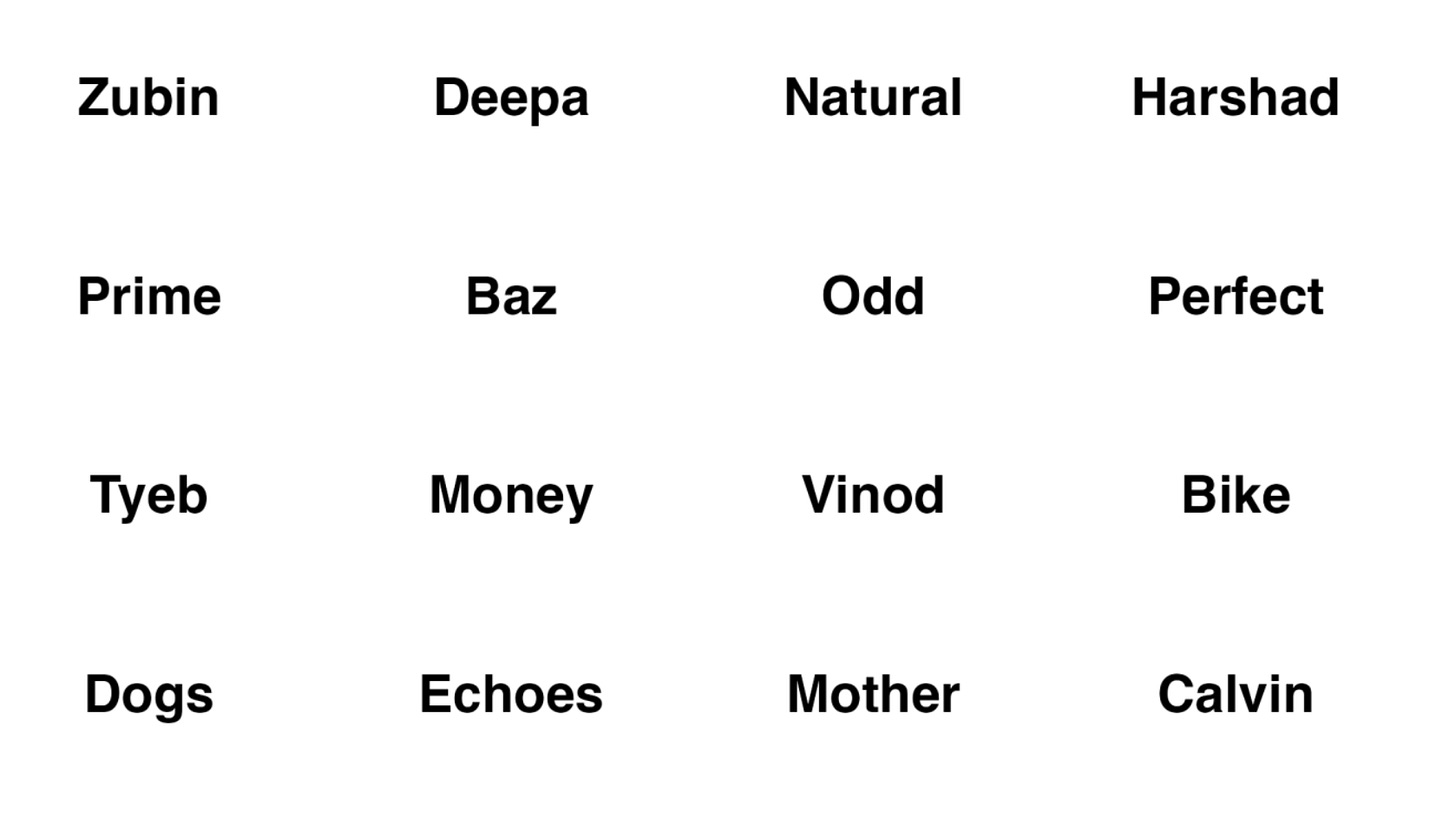

For the uninitiated, the wall round involves a grid of 16 jumbled up clues, which need to be unravelled into 4 logical groups of 4. There might be red herrings (some clues might be part of several groups) but there is only one correct solution. For example, this is a grid we had used in last year’s edition:

Try your hand at it!
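The “only one correct solution” rule can be checked mechanically, provided someone writes down, for each group, every clue in the grid that could plausibly belong to it (red herrings included). Here is a minimal Python sketch of such a check by brute-force search. The function `count_solutions` and the toy wall are hypothetical illustrations, not taken from either edition of the quiz.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Set


def count_solutions(grid: Set[str], groups: Dict[str, Set[str]]) -> int:
    """Count the ways to split `grid` into exactly four clues per group.

    Each group lists every clue in the grid that could plausibly belong to
    it, so red herrings appear under more than one group.
    """
    names = list(groups)

    def solve(i: int, remaining: FrozenSet[str]) -> int:
        if i == len(names):
            # A valid solution uses up every clue in the grid.
            return 1 if not remaining else 0
        eligible = sorted(groups[names[i]] & remaining)
        return sum(
            solve(i + 1, remaining - frozenset(four))
            for four in combinations(eligible, 4)
        )

    return solve(0, frozenset(grid))


# A hypothetical toy wall, with "Mercury" and "Ruby" as red herrings.
grid = {
    "Mercury", "Venus", "Mars", "Saturn",
    "Iron", "Gold", "Tin", "Neon",
    "Python", "Ruby", "Java", "Rust",
    "Topaz", "Jade", "Opal", "Garnet",
}
groups = {
    "Planets": {"Mercury", "Venus", "Mars", "Saturn"},
    "Chemical elements": {"Mercury", "Iron", "Gold", "Tin", "Neon"},
    "Programming languages": {"Python", "Ruby", "Java", "Rust"},
    "Gemstones": {"Ruby", "Topaz", "Jade", "Opal", "Garnet"},
}

print(count_solutions(grid, groups))  # → 1
```

A count of exactly 1 matches the rule; 0 means the groups as written cannot fill the grid, and anything above 1 means a red herring has made the wall ambiguous. The check is only as good as the eligibility lists, which still have to come from a human (or be generated and then verified).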

Anyway, on the morning of the quiz, while in the shower, I got an idea for a grid. I mentally listed clues for one category, and then thought of additional clues that could belong to other groups. This way I identified four coherent groups, and it was time to set the question.

Once again, ChatGPT came to the rescue: I asked it to list examples for each of the groups I wanted, and picked and chose from those to set the grid. By now it was 9am, and the quiz was at 10:30. Subrat was already on his way to the venue. There was no time to properly stress-test the question.

So I simply gave the grid to GPT-4o and asked it to unscramble it into four logical groups of four. It tried, got into a right royal mess, and gave an absolutely rubbish answer. Basically, the question was too hard for this “basic, one-pass” model.

Then I gave the same grid to the “reasoning model” o3. It took some time, went through several iterations, and solved the grid perfectly, with clear explanations of what each group was.

GPT-4o found it too hard; o3 solved it. That was good enough to tell me I had set a good question, and off I went to conduct the quiz.
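The two-model test can be written down as a small sketch in Python. It assumes each model’s free-text answer has already been parsed into four lists of clues (that parsing step is not shown), compares answers to the intended solution regardless of the order of groups or of clues within a group, and turns the result into a rough verdict. Only the “weak model fails, strong model solves” reading comes from the experience above; the other two verdicts, and all names and data here, are hypothetical.

```python
from typing import FrozenSet, Iterable, Set


def as_partition(groups: Iterable[Iterable[str]]) -> Set[FrozenSet[str]]:
    """Forget ordering: of the groups, and of the clues within each group."""
    return {frozenset(g) for g in groups}


def same_partition(
    proposed: Iterable[Iterable[str]], intended: Iterable[Iterable[str]]
) -> bool:
    return as_partition(proposed) == as_partition(intended)


def verdict(weak_solved: bool, strong_solved: bool) -> str:
    if strong_solved and not weak_solved:
        return "hard but solvable"  # the outcome described above
    if weak_solved:
        return "possibly too easy"  # assumed interpretation
    return "possibly broken or ambiguous"  # assumed interpretation


intended = [
    ["Mercury", "Venus", "Mars", "Saturn"],
    ["Iron", "Gold", "Tin", "Neon"],
    ["Python", "Ruby", "Java", "Rust"],
    ["Topaz", "Jade", "Opal", "Garnet"],
]
# Hypothetical parsed answers: the weak model swaps "Ruby" and "Opal"...
weak_answer = [
    ["Mercury", "Venus", "Mars", "Saturn"],
    ["Iron", "Gold", "Tin", "Neon"],
    ["Python", "Opal", "Java", "Rust"],
    ["Topaz", "Jade", "Ruby", "Garnet"],
]
# ...while the strong model finds the intended groups, in a different order.
strong_answer = [
    ["Jade", "Garnet", "Topaz", "Opal"],
    ["Rust", "Java", "Python", "Ruby"],
    ["Neon", "Tin", "Gold", "Iron"],
    ["Saturn", "Mars", "Venus", "Mercury"],
]

print(verdict(same_partition(weak_answer, intended),
              same_partition(strong_answer, intended)))  # → hard but solvable
```

Comparing sets of frozensets rather than lists means a model is not penalised for listing the groups, or the clues within them, in a different order from the setter.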
